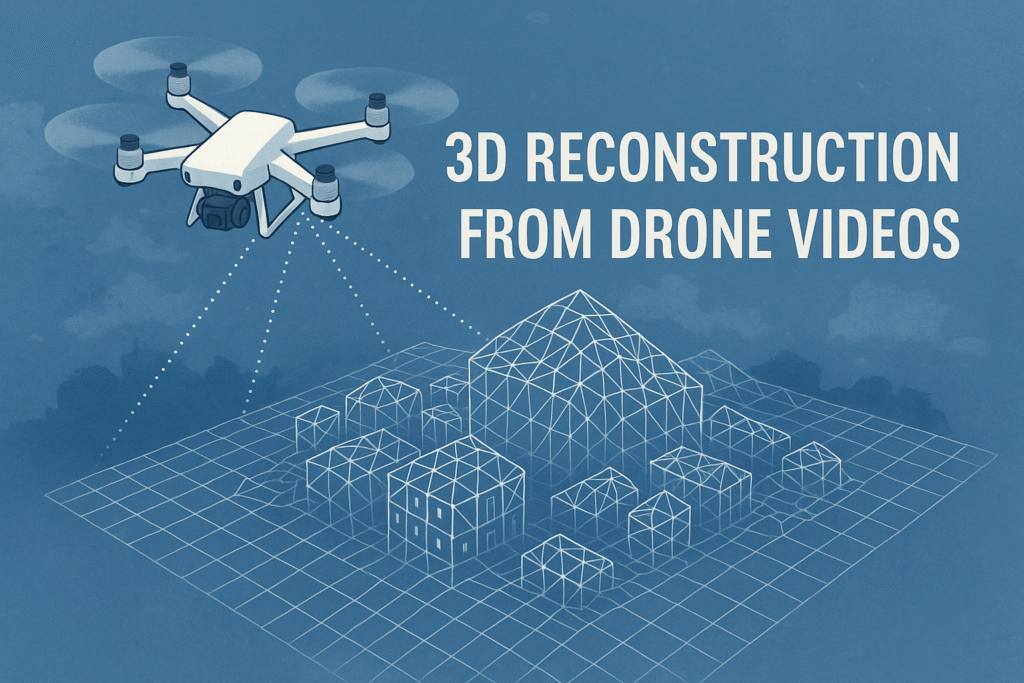

Drones don’t just capture beautiful videos — they give us the ability to rebuild the real world in three dimensions. But how does a simple 2D video, recorded by a moving camera, turn into a full 3D model of buildings, landscapes, or entire towns? Understanding the answer requires looking at the fundamental principles that convert flat images into spatial structure.

Every image is a flattened version of the 3D world

When a drone takes a picture, the camera captures the world by projecting it onto a flat image sensor. In this projection, depth disappears. Every point along the same line of sight lands on the same pixel, so only each point's position in the image, its color, and the apparent shape of objects remain. A single image therefore cannot tell us how far objects are from the camera. However, when the same scene is viewed from multiple angles, the differences between those views give clues about the missing third dimension.
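
Here is a minimal sketch of this loss of depth. It assumes an ideal pinhole camera with no lens distortion, and the focal length and principal point are made-up values, not those of any particular drone. Two points on the same line of sight, one twice as far away as the other, project to exactly the same pixel:

```python
import numpy as np

def project(point_cam, f, cx, cy):
    """Ideal pinhole projection of a 3D point (X, Y, Z) given in camera coordinates:
    u = f * X / Z + cx,  v = f * Y / Z + cy."""
    X, Y, Z = point_cam
    return np.array([f * X / Z + cx, f * Y / Z + cy])

# Hypothetical intrinsics: focal length and principal point, in pixels.
f, cx, cy = 1000.0, 640.0, 360.0

near = np.array([1.0, 0.5, 10.0])   # 10 m in front of the camera
far = 2.0 * near                    # same line of sight, 20 m away

print(project(near, f, cx, cy))     # pixel (740, 410)
print(project(far, f, cx, cy))      # also pixel (740, 410): the depth difference is gone
```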

Drone movement reveals depth

As a drone moves through the air, objects in the video shift across the frame by different amounts depending on how far they are from the camera. Nearby objects appear to move quickly, while distant objects barely move. This effect, called parallax, is the same phenomenon that lets our two eyes perceive depth. Parallax comes from the camera changing position; a camera that only rotates in place produces none. By measuring these shifts across frames, it becomes possible to infer how far each part of the scene is from the camera.
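
Take the simplest case: a camera that slides sideways by a known distance B (the baseline) without rotating. A point's shift d in pixels then relates to its depth Z by d = f * B / Z, where f is the focal length in pixels. The sketch below inverts that relation. The function name and the numbers are illustrative assumptions. Real drone footage also involves rotation, which full pipelines handle through SfM:

```python
def depth_from_parallax(shift_px, focal_px, baseline_m):
    """Depth in meters of a point that shifted shift_px pixels between two frames,
    assuming the camera translated sideways by baseline_m meters without rotating
    and has a focal length of focal_px pixels: Z = f * B / d."""
    if shift_px <= 0:
        raise ValueError("shift must be positive; zero shift means the point is infinitely far away")
    return focal_px * baseline_m / shift_px

# Hypothetical setup: 1000 px focal length, drone moved 2 m between the two frames.
for shift in (100.0, 20.0, 4.0):
    print(f"{shift:>5} px shift -> {depth_from_parallax(shift, 1000.0, 2.0):.0f} m away")
# 100.0 px shift -> 20 m away
#  20.0 px shift -> 100 m away
#   4.0 px shift -> 500 m away
```

The inverse relationship explains the observation above: large shifts mean nearby objects, and small shifts mean distant ones.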

Identifying the same point across multiple frames

To reconstruct a 3D scene, the software searches for distinctive points (features) that appear in several frames, such as the corner of a roof or a unique texture on the ground. Once a point is matched across different images, the way its position changes from one frame to another constrains where it lies in space. This matching forms the foundation of Structure-from-Motion (SfM), a technique that recovers both the camera's motion and the 3D structure of the scene at the same time.
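
As a rough illustration, assume each detected point already has a numeric descriptor. Real pipelines compute these with detectors such as SIFT or ORB; here they are random vectors. The hypothetical function below matches descriptors by nearest neighbor and applies Lowe's ratio test. The test rejects a match when the second-best candidate is almost as close as the best one, which filters out ambiguous points such as repeated textures:

```python
import numpy as np

def match_descriptors(desc_a, desc_b, ratio=0.8):
    """Match each descriptor in desc_a (N x D) to its nearest neighbor in desc_b
    (M x D, M >= 2), keeping a match only if it passes Lowe's ratio test."""
    # Pairwise Euclidean distances, shape (N, M).
    dists = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    matches = []
    for i, row in enumerate(dists):
        order = np.argsort(row)
        best, second = order[0], order[1]
        # Keep the match only if the best candidate is clearly better than the runner-up.
        if row[best] < ratio * row[second]:
            matches.append((i, int(best)))
    return matches

rng = np.random.default_rng(0)
desc_b = rng.normal(size=(50, 32))                          # points detected in frame B
perm = rng.permutation(50)[:10]                             # 10 of them are also seen in frame A
desc_a = desc_b[perm] + 0.05 * rng.normal(size=(10, 32))    # slight change in appearance
matches = match_descriptors(desc_a, desc_b)                 # list of (index in A, index in B)
```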

Structure-from-Motion recovers motion and shape together

SfM determines where the drone's camera was, and how it was oriented, when each frame was captured, as well as the 3D structure of the environment. By solving for both unknowns together, SfM produces a sparse set of 3D points that outline the shape of buildings, terrain, and other objects, like a rough skeleton of the scene. Although this sparse reconstruction captures the basic geometry, more detail is required to create a full model.
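
SfM systems typically refine camera poses and 3D points with bundle adjustment, which minimizes reprojection error. That error is the pixel distance between where a point was observed and where the current estimates say it should appear. The sketch below computes that cost for one frame. It assumes a pinhole camera with intrinsics K and a world-to-camera rotation R and translation t, all with hypothetical values. A real solver would optimize this cost over all frames and points with a nonlinear least-squares method:

```python
import numpy as np

def reprojection_residuals(K, R, t, points_3d, observed_px):
    """Pixel residuals between observed keypoints (N x 2) and the projection of
    3D world points (N x 3) through a camera with intrinsics K and pose (R, t)."""
    cam = points_3d @ R.T + t          # world -> camera coordinates
    proj = cam @ K.T                   # apply intrinsics (homogeneous pixel coordinates)
    px = proj[:, :2] / proj[:, 2:3]    # perspective divide
    return px - observed_px

K = np.array([[1000.0, 0.0, 640.0], [0.0, 1000.0, 360.0], [0.0, 0.0, 1.0]])
R = np.eye(3)
points = np.array([[1.0, 0.5, 10.0], [-2.0, 1.0, 20.0]])
observed = np.array([[740.0, 410.0], [540.0, 410.0]])

def cost(t):
    """Half the sum of squared residuals: the quantity bundle adjustment minimizes."""
    res = reprojection_residuals(K, R, t, points, observed)
    return 0.5 * np.sum(res ** 2)

print(cost(np.array([0.0, 0.0, 0.0])))  # 0.0: this pose and structure explain the observations
print(cost(np.array([0.1, 0.0, 0.0])))  # about 62.5: a slightly wrong camera position is penalized
```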

Triangulation reveals 3D point locations

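To find the position of a point in three-dimensional space, the system relies on triangulation. It traces a ray from each camera's position, as recovered by SfM, through the pixel where the matched point appears in that image. The place where these rays meet gives the point's 3D position. Because matches and camera estimates are never perfectly accurate, the rays rarely intersect exactly, so the system picks the point that best agrees with all of them. Repeating this for thousands of points forms a basic 3D representation of the scene.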
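
One common way to do this is linear (DLT) triangulation, sketched below. It assumes each camera is described by a 3x4 projection matrix P = K[R | t] obtained from SfM. Each observed pixel gives two linear equations in the unknown homogeneous point. The SVD returns the solution that minimizes the algebraic least-squares error, so noisy rays that do not quite meet still yield a sensible point. The cameras and the point are made-up values:

```python
import numpy as np

def project(P, X):
    """Project a 3D point X with a 3x4 camera matrix P, returning pixel (u, v)."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

def triangulate(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one point seen at pixel x1 by P1 and x2 by P2."""
    # From u = (P[0] . X) / (P[2] . X): u * P[2] - P[0] = 0, and likewise for v.
    A = np.array([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # The least-squares solution is the right singular vector with the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    X = Vt[-1]
    return X[:3] / X[3]   # back from homogeneous coordinates

K = np.array([[1000.0, 0.0, 640.0], [0.0, 1000.0, 360.0], [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])                  # camera at the origin
P2 = K @ np.hstack([np.eye(3), np.array([[-2.0], [0.0], [0.0]])])   # camera moved 2 m right

X_true = np.array([1.0, 0.5, 10.0])
x1, x2 = project(P1, X_true), project(P2, X_true)   # (740, 410) and (540, 410)
X_est = triangulate(P1, P2, x1, x2)                  # recovers X_true
X_noisy = triangulate(P1, P2, x1, x2 + np.array([0.5, -0.3]))  # rays no longer meet exactly
```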

From a skeleton of points to a detailed model

A sparse collection of points is not enough to capture fine details. Multi-View Stereo (MVS) builds on SfM. Using the camera positions that SfM recovered, it examines nearly every pixel across many frames and estimates each pixel's depth by finding the depth at which that pixel looks most consistent from different viewpoints. This produces a dense point cloud containing millions of points, resulting in a richly detailed representation of the environment.
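
The photo-consistency idea can be sketched for a deliberately simplified case: two rectified views from a camera that moved sideways, so each depth hypothesis predicts a horizontal shift. For a reference pixel, the hypothetical function below tries a list of candidate depths. For each one, it compares the surrounding patch with the predicted patch in the other view using normalized cross-correlation (NCC), and it keeps the best-scoring depth. Real MVS systems compare many views and use schemes such as plane sweeping or PatchMatch. The images here are synthetic random texture:

```python
import numpy as np

def ncc(a, b):
    """Normalized cross-correlation of two equally sized patches, in [-1, 1]."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0

def best_depth(ref, other, row, col, depths, focal_px, baseline_m, half=3):
    """Pick the candidate depth whose predicted match in `other` (a rectified view from
    a camera moved baseline_m to the right) looks most like the patch around (row, col)."""
    ref_patch = ref[row - half:row + half + 1, col - half:col + half + 1]
    best, best_score = None, -np.inf
    for z in depths:
        shift = int(round(focal_px * baseline_m / z))   # predicted parallax in pixels
        c2 = col - shift                                # content moves left in the second view
        if c2 - half < 0 or c2 + half >= other.shape[1]:
            continue                                    # hypothesis falls outside the image
        patch = other[row - half:row + half + 1, c2 - half:c2 + half + 1]
        score = ncc(ref_patch, patch)
        if score > best_score:
            best, best_score = z, score
    return best, best_score

rng = np.random.default_rng(2)
ref = rng.random((64, 160))                     # textured reference image
true_shift = 20                                 # f * B / Z with f = 1000 px, B = 0.5 m, Z = 25 m
other = np.roll(ref, -true_shift, axis=1)       # second view: content shifted 20 px left

depths = [10.0, 12.5, 20.0, 25.0, 50.0, 100.0]
z, score = best_depth(ref, other, row=30, col=100, depths=depths,
                      focal_px=1000.0, baseline_m=0.5)
```

Repeating this search for every pixel, and across several neighboring frames, yields the dense depth estimates that are fused into the point cloud.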

From points to surfaces and textures

Once a dense point cloud is created, it needs to be turned into a smooth, continuous surface. The computer connects nearby points into a mesh made of triangles and then applies colors and textures from the original drone images onto that surface. The result is a realistic 3D model that resembles the real world in both shape and appearance.
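
General-purpose pipelines mesh unorganized point clouds with methods such as Poisson surface reconstruction or Delaunay-based meshing. For terrain stored as a regular height grid (a 2.5D surface), a much simpler approach works: split each grid cell into two triangles. The sketch below does that and also assigns texture (UV) coordinates. It assumes the texture is an orthophoto covering exactly the same grid, and the function and its parameters are illustrative:

```python
import numpy as np

def heightmap_to_mesh(heights, spacing=1.0):
    """Turn an H x W grid of terrain heights (H, W >= 2) into a triangle mesh.
    Returns vertices (H*W x 3), triangles (2*(H-1)*(W-1) x 3 vertex indices)
    and per-vertex UV texture coordinates in [0, 1]."""
    H, W = heights.shape
    rows, cols = np.mgrid[0:H, 0:W]
    vertices = np.column_stack([cols.ravel() * spacing,
                                rows.ravel() * spacing,
                                heights.ravel()])
    # Assumes an orthophoto texture aligned with the grid, so UVs are just normalized indices.
    uv = np.column_stack([cols.ravel() / (W - 1), rows.ravel() / (H - 1)])
    faces = []
    for i in range(H - 1):
        for j in range(W - 1):
            a = i * W + j      # top-left corner of the grid cell
            b = a + 1          # top-right
            c = a + W          # bottom-left
            d = c + 1          # bottom-right
            faces.append((a, c, b))   # each cell is split into two triangles
            faces.append((b, c, d))
    return vertices, np.array(faces), uv

heights = np.array([[0.0, 0.0, 0.0],
                    [0.0, 2.0, 0.0],
                    [0.0, 0.0, 0.0]])   # a small bump in the middle
verts, tris, uv = heightmap_to_mesh(heights, spacing=0.5)
```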

Aligning the model with the real world

If the drone records GPS coordinates, altitude, or orientation data, the reconstructed 3D model can be aligned with actual geographic locations on Earth. This step is needed because a reconstruction built from images alone has an arbitrary scale, position, and orientation. The process, called georeferencing, ensures that the model fits correctly into mapping systems, GIS platforms, simulations, or digital twins.
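
A common way to perform this alignment is to estimate a similarity transform (scale s, rotation R, translation t) that maps the SfM camera positions onto the drone's recorded GPS positions. Umeyama's least-squares method, sketched below, is one option. The sketch assumes the GPS positions have already been converted to a local metric frame (such as east-north-up). The synthetic data check that a known transform is recovered:

```python
import numpy as np

def similarity_transform(src, dst):
    """Least-squares s, R, t such that dst ~= s * R @ src + t (Umeyama's method).
    src: N x 3 camera centers from SfM (arbitrary units),
    dst: N x 3 matching GPS positions in a local metric frame."""
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_src, dst - mu_dst
    cov = dst_c.T @ src_c / len(src)            # 3x3 cross-covariance
    U, S, Vt = np.linalg.svd(cov)
    D = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        D[2, 2] = -1.0                          # avoid returning a reflection
    R = U @ D @ Vt
    var_src = (src_c ** 2).sum() / len(src)
    s = np.trace(np.diag(S) @ D) / var_src
    t = mu_dst - s * R @ mu_src
    return s, R, t

rng = np.random.default_rng(1)
src = rng.normal(size=(20, 3))                  # hypothetical SfM camera centers
theta = np.deg2rad(30.0)
R_true = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                   [np.sin(theta),  np.cos(theta), 0.0],
                   [0.0, 0.0, 1.0]])
dst = 2.5 * src @ R_true.T + np.array([100.0, 200.0, 50.0])   # synthetic "GPS" positions
s, R, t = similarity_transform(src, dst)
```

Once s, R, and t are known, every model point p can be moved into the geographic frame with s * R @ p + t.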

How AI is improving reconstruction

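Recent advances in artificial intelligence have made 3D reconstruction even more powerful. Techniques such as Neural Radiance Fields (NeRF) and machine-learned depth estimation can help fill in missing details, smooth out noisy regions, and produce more realistic surfaces and lighting. NeRF, in particular, represents the entire scene as a continuous volume. A neural network predicts the density and color at any 3D point seen from any direction, and images are rendered by accumulating these values along each camera ray. Trained on frames whose camera positions are typically estimated with SfM, this approach allows for highly photorealistic reconstructions.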
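
The rendering step of NeRF can be sketched independently of the neural network. Along each camera ray, the network's predicted densities sigma_i and colors c_i at sample points are combined by alpha compositing. Each sample contributes in proportion to its opacity and to how much light survives the samples in front of it. In the sketch below, the densities and colors are hand-picked stand-ins for network outputs: empty space, then an opaque red surface, then a blue surface hidden behind it:

```python
import numpy as np

def render_ray(sigmas, colors, deltas):
    """Volume-render one ray from samples ordered front to back:
    alpha_i = 1 - exp(-sigma_i * delta_i), T_i = prod_{j<i} (1 - alpha_j),
    pixel color = sum_i T_i * alpha_i * c_i."""
    alphas = 1.0 - np.exp(-sigmas * deltas)
    # Transmittance: fraction of light reaching sample i without being absorbed earlier.
    trans = np.cumprod(np.concatenate([[1.0], 1.0 - alphas[:-1]]))
    weights = trans * alphas
    return weights @ colors, weights

sigmas = np.array([0.0, 50.0, 50.0])        # empty space, dense surface, dense surface
deltas = np.full(3, 0.1)                    # distance between consecutive samples
colors = np.array([[0.2, 0.8, 0.2],         # color of empty space never shows (zero density)
                   [1.0, 0.0, 0.0],         # red surface in front
                   [0.0, 0.0, 1.0]])        # blue surface behind it
pixel, weights = render_ray(sigmas, colors, deltas)
```

Almost all of the weight lands on the red surface, so the rendered pixel is red. Training adjusts the network until pixels rendered this way match the drone's frames.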

Final takeaway

3D reconstruction from drone videos works by using multiple viewpoints to recover depth, tracking how points move across frames, applying geometric principles such as triangulation to locate those points in space, and densifying the result with multi-view stereo before meshing and texturing it. The end result is a detailed digital copy of a real environment, created from video footage alone. This combination of motion, geometry, and modern computer vision makes it possible to recreate the world in remarkable detail using nothing more than a camera-equipped drone.